What is Sensor Fusion?

May 15, 2024

Sensor fusion integrates data from multiple sensors to provide a comprehensive and accurate understanding of the environment or system being monitored or controlled.

People can perform complex tasks like driving a car or playing sports because they have multiple senses, such as hearing, vision, touch, and balance. Our brains integrate all these inputs and make real-time decisions about what to do under both normal and unexpected circumstances. Prior experience with these activities provides context, and we keep adding new experiences to our knowledge, which improves our performance.

Simple machines often rely on a single sensor. For example, elevators and garage door openers use optical sensors to avoid closing on people.

Advanced machines must be able to integrate and process the outputs of multiple sensors to perform complex tasks like driving a car, flying an airplane, or playing football.

Note: Sensor fusion is sometimes called “multisensor fusion” or “multi-sensor data fusion”; the terms are essentially interchangeable.

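Sensor fusion can take place at different levels. High-level sensor fusion refers to integrating and processing data from multiple sensors or sources to extract meaningful information and insights at an abstract level, for example by combining the decisions or object lists that each sensor has already produced. Lower levels fuse the raw sensor data itself (low-level fusion) or features extracted from each sensor's data (mid-level fusion).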
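To make the distinction concrete, here is a minimal sketch contrasting low-level (data-level) fusion, which combines raw measurements, with high-level (decision-level) fusion, which combines each sensor's own conclusion. The sensor readings, the plain averaging, and the majority-vote rule with its 0.5 threshold are hypothetical choices for illustration, not a prescribed method.

```python
from statistics import mean

# Hypothetical raw range readings (metres) to the same object from three sensors.
raw_ranges = {"radar": 25.3, "lidar": 25.1, "camera": 26.0}

def low_level_fusion(readings: dict[str, float]) -> float:
    """Data-level fusion: combine the raw measurements themselves (here, a plain mean)."""
    return mean(readings.values())

# Hypothetical per-sensor decisions: did each sensor's own pipeline detect a pedestrian?
detections = {"radar": True, "lidar": True, "camera": False}

def high_level_fusion(decisions: dict[str, bool], threshold: float = 0.5) -> bool:
    """Decision-level fusion: each sensor decides independently; fuse by majority vote."""
    votes = sum(decisions.values())
    return votes / len(decisions) > threshold

print(f"fused range: {low_level_fusion(raw_ranges):.2f} m")
print(f"pedestrian detected: {high_level_fusion(detections)}")
```

In practice the two approaches trade off differently: low-level fusion keeps the most information but requires tightly aligned, compatible data, while high-level fusion is simpler to integrate but discards detail before the sensors are combined.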

Sensor technologies and sensor fusion techniques are growing fast in the automotive industry, spurred by the push toward self-driving cars. But it’s not just cars: sensor fusion is used today in more and more fields, including robotics, defense, medicine, telemedicine, and image processing, just to name a few.

Key sensor fusion technologies

Here are the critical hardware and software technologies behind sensor fusion.

Advanced sensors

Technology advances across various individual sensors are fundamental to sensor fusion. Sensors capture visual, spatial, and temporal information about the environment or object under test, including:

RADAR (Radio Detection and Ranging) sensors can detect objects in front of a vehicle, even at high speeds, and measure their range and relative speed. They also operate very well in poor weather conditions such as rain and fog.
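A RADAR's two basic measurements follow from simple physics: range from the round-trip time of the echo, R = c·Δt/2, and radial (closing) speed from the Doppler shift, v = f_d·λ/2. The sketch below assumes a monostatic radar and a 77 GHz carrier, a band commonly used for automotive radar; the echo delay and Doppler shift are made-up values.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s

def radar_range(round_trip_s: float) -> float:
    """Range to target: the echo travels out and back, so halve the path length."""
    return SPEED_OF_LIGHT * round_trip_s / 2

def radial_speed(doppler_hz: float, carrier_hz: float) -> float:
    """Closing speed from the Doppler shift: v = f_d * wavelength / 2."""
    wavelength = SPEED_OF_LIGHT / carrier_hz
    return doppler_hz * wavelength / 2

# Illustrative values: a 400 ns echo delay and a 10.27 kHz Doppler shift at 77 GHz.
print(f"range: {radar_range(400e-9):.1f} m")                     # about 60 m
print(f"closing speed: {radial_speed(10.27e3, 77e9):.1f} m/s")  # about 20 m/s
```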

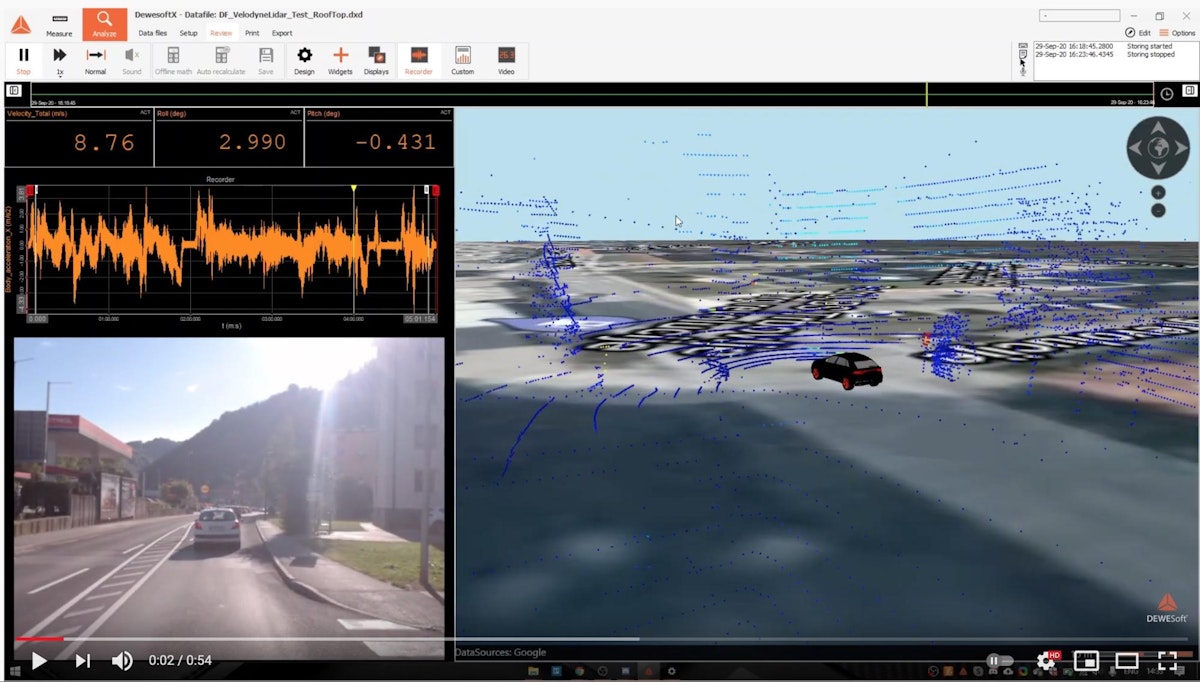

LiDAR (Light Detection and Ranging) sensors provide high-resolution 3D data, allowing for precise mapping of the environment and detection of obstacles, and do not require ambient light to work. LiDAR builds a grayscale 3D map called a “point cloud.”

Multispectral LiDAR combines LiDAR with other sensors, like cameras and spectrometers, to provide additional environmental information, including color. The figure above is an orthographic projection of a point cloud captured by a car. The points are colored by signal strength multiplied by range, with orange indicating brighter regions and blue indicating darker regions.
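Each LiDAR return is natively a range measured along a known beam direction; converting it to Cartesian coordinates yields one point of the cloud. This minimal sketch does that conversion and also computes the per-point "signal strength multiplied by range" value described above for coloring. The angle convention (azimuth in the horizontal plane, elevation above it) and the sample returns are assumptions.

```python
import numpy as np

def returns_to_points(rng, azimuth, elevation):
    """Convert LiDAR returns (range in m, angles in rad) to an N x 3 array of XYZ points."""
    rng, azimuth, elevation = map(np.asarray, (rng, azimuth, elevation))
    x = rng * np.cos(elevation) * np.cos(azimuth)
    y = rng * np.cos(elevation) * np.sin(azimuth)
    z = rng * np.sin(elevation)
    return np.column_stack((x, y, z))

# Three hypothetical returns: range (m), azimuth and elevation (deg), intensity (0..1).
ranges = np.array([10.0, 20.0, 5.0])
azimuths = np.radians([0.0, 90.0, 45.0])
elevations = np.radians([0.0, 0.0, 30.0])
intensity = np.array([0.8, 0.2, 0.5])

points = returns_to_points(ranges, azimuths, elevations)
color_value = intensity * ranges  # per-point quantity that would be mapped to a color scale
print(points.round(2))
print(color_value)
```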

Cameras support object detection, lane-marking detection, traffic sign recognition and reading, semantic segmentation, and color recognition. Combined with OCR algorithms, camera images can “read” text on road signs, extracting critical meaning.

GNSS (Global Navigation Satellite System) receivers use satellites overhead to provide accurate positioning and navigation. However, they don’t work reliably when tunnels, garages, or tall buildings block the view of the sky.

IMU (Inertial Measurement Unit) sensors can “dead reckon” using internal gyros, accelerometers, and magnetometers. However, without an absolute external position reference, their accuracy drifts over time. Sensor fusion combines the complementary strengths of GNSS and IMU systems to mitigate each one's weaknesses: the IMU keeps navigating when satellites are out of view, and GNSS data corrects the accumulated IMU drift when the sky comes back into view. Submarines, for example, use IMUs to navigate undersea, where satellite signals cannot reach.
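GNSS/IMU fusion is classically handled with a Kalman filter. The following is a minimal one-dimensional sketch, not a production navigation filter: the state is [position, velocity], the IMU's accelerometer drives the prediction step, and a GNSS position fix, when available, drives the correction step. The noise values, the constant accelerometer bias, the 1 Hz GNSS rate, and the simulated "tunnel" outage from 20 s to 40 s are all hypothetical.

```python
import numpy as np

# Hypothetical simulation settings (all values are assumptions for illustration).
dt = 0.1            # s, IMU sample period
steps = 600         # 60 s of driving
accel_bias = 0.05   # m/s^2, uncorrected accelerometer bias
gnss_sigma = 3.0    # m, GNSS position noise (1-sigma)

F = np.array([[1.0, dt], [0.0, 1.0]])  # state transition for [position, velocity]
B = np.array([[0.5 * dt**2], [dt]])    # how measured acceleration enters the state
H = np.array([[1.0, 0.0]])             # GNSS measures position only
Q = np.diag([1e-4, 1e-3])              # process noise (tuning assumption)
R = np.array([[gnss_sigma**2]])        # GNSS measurement noise

rng = np.random.default_rng(0)
true_pos, true_vel = 0.0, 10.0         # vehicle cruising at a constant 10 m/s
x = np.array([[0.0], [10.0]])          # fused GNSS/IMU estimate
P = np.eye(2)                          # its covariance
dead_reckon = x.copy()                 # IMU-only estimate, for comparison

for k in range(steps):
    t = k * dt
    true_pos += true_vel * dt
    accel_meas = 0.0 + accel_bias      # true acceleration is zero; the IMU adds its bias

    # Predict: both estimates integrate the (biased) IMU acceleration.
    x = F @ x + B * accel_meas
    P = F @ P @ F.T + Q
    dead_reckon = F @ dead_reckon + B * accel_meas

    # Correct with a 1 Hz GNSS fix, except inside the simulated tunnel (20 s to 40 s).
    sky_visible = not (20.0 <= t < 40.0)
    if sky_visible and k % 10 == 0:
        z = np.array([[true_pos + rng.normal(0.0, gnss_sigma)]])
        S = H @ P @ H.T + R
        K = P @ H.T @ np.linalg.inv(S)
        x = x + K @ (z - H @ x)
        P = (np.eye(2) - K @ H) @ P

imu_only_error = abs(dead_reckon[0, 0] - true_pos)
fused_error = abs(x[0, 0] - true_pos)
print(f"IMU-only position error: {imu_only_error:.1f} m")
print(f"GNSS/IMU fused error:    {fused_error:.1f} m")
```

The IMU-only error grows quadratically with time (0.5 × 0.05 m/s² × (60 s)² = 90 m here), while the fused estimate stays bounded because each GNSS fix pulls it back. A real filter would usually also estimate the sensor bias as an extra state.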

Localization and mapping

Localization and mapping technologies estimate objects’ position and orientation. SLAM (Simultaneous Localization and Mapping) techniques are used in sensor fusion applications, especially in robotics and autonomous vehicles, to build a map of the surroundings with the sensor platform localized within it.

Visual odometry is a computer vision technique that estimates a vehicle's motion by analyzing camera images. By tracking features between frames, it calculates the vehicle's relative position and orientation without any external sensors. In this way, visual odometry estimates the sensor's ego-motion (i.e., its motion relative to the environment). Visual SLAM extends this concept to calculate the sensor's trajectory and map the environment simultaneously.
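Because visual odometry estimates motion between consecutive frames, the trajectory comes from chaining those relative motions together. This minimal 2D sketch assumes a feature-tracking front end (not shown) has already produced each frame-to-frame motion as (forward, lateral, heading change) in the vehicle's own frame; the `Pose2D` class and the motion values are hypothetical. Any error in a single step propagates into every later pose, which is why visual odometry drifts and why Visual SLAM also builds a map to correct itself.

```python
import math
from dataclasses import dataclass

@dataclass
class Pose2D:
    x: float
    y: float
    theta: float  # heading in radians, kept in [-pi, pi)

    def compose(self, dx: float, dy: float, dtheta: float) -> "Pose2D":
        """Apply a motion (dx, dy, dtheta) expressed in this pose's own frame."""
        c, s = math.cos(self.theta), math.sin(self.theta)
        return Pose2D(
            x=self.x + c * dx - s * dy,
            y=self.y + s * dx + c * dy,
            theta=(self.theta + dtheta + math.pi) % (2 * math.pi) - math.pi,
        )

# Hypothetical frame-to-frame motions: 1 m forward, then a 90-degree left turn, four times.
relative_motions = [(1.0, 0.0, math.pi / 2)] * 4

trajectory = [Pose2D(0.0, 0.0, 0.0)]
for dx, dy, dth in relative_motions:
    trajectory.append(trajectory[-1].compose(dx, dy, dth))

for p in trajectory:
    print(f"x={p.x:+.2f}  y={p.y:+.2f}  heading={math.degrees(p.theta):+.0f} deg")
```

With perfect motion estimates the vehicle drives a 1 m square and returns to the origin; adding even a small error to each step would leave the final pose visibly offset.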

Integration and communication

Sensor data integration often involves reconciling different data formats, communication protocols, and clocks. Integrating heterogeneous sensor data and ensuring seamless communication among sensors and processing units are essential. The supporting technologies include standardized communication protocols (e.g., CAN bus, Ethernet), middleware for data integration, and data synchronization methods.
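Time synchronization is often the first concrete integration step: sensors sample at different rates, so their data must be placed on a common timebase before fusion. This minimal sketch assumes both streams are already timestamped against the same clock (for example, after hardware or PTP synchronization) and uses `numpy.interp` to linearly resample a hypothetical 100 Hz IMU stream at the timestamps of a 10 Hz GNSS stream.

```python
import numpy as np

# Hypothetical streams on a shared clock (seconds):
imu_t = np.linspace(0.0, 0.99, 100)      # 100 Hz IMU timestamps
imu_yaw_rate = 0.2 * imu_t               # deg/s, a slowly ramping yaw rate
gnss_t = np.linspace(0.005, 0.905, 10)   # 10 Hz GNSS timestamps, offset by 5 ms

# Resample the IMU stream at the GNSS timestamps so every fix has a matching IMU value.
imu_at_gnss = np.interp(gnss_t, imu_t, imu_yaw_rate)

for t, w in zip(gnss_t, imu_at_gnss):
    print(f"t={t:.3f} s  IMU yaw rate={w:.4f} deg/s")
```

Linear interpolation is adequate for slowly varying signals like this one; fast-changing signals may need higher sample rates or more careful resampling.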

Signal processing techniques

Signal processing algorithms preprocess, filter, and extract useful information from raw data. This includes noise reduction, feature extraction, and data normalization. These algorithms play a crucial role in preparing sensor data for fusion.
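Two of these preprocessing steps, noise reduction and data normalization, can be sketched in a few lines. This example assumes a centered moving-average filter for noise reduction (one of many possible choices) and z-score normalization so that signals with different units and scales become comparable; the signal, noise level, and window length are hypothetical.

```python
import numpy as np

def moving_average(signal: np.ndarray, window: int = 5) -> np.ndarray:
    """Noise reduction: centered moving average, output has the same length as the input."""
    kernel = np.ones(window) / window
    return np.convolve(signal, kernel, mode="same")

def zscore(signal: np.ndarray) -> np.ndarray:
    """Normalization: rescale to zero mean and unit standard deviation."""
    return (signal - signal.mean()) / signal.std()

def rms(error: np.ndarray) -> float:
    return float(np.sqrt(np.mean(error ** 2)))

rng = np.random.default_rng(42)
t = np.linspace(0.0, 1.0, 200)
clean = np.sin(2 * np.pi * 2 * t)              # hypothetical 2 Hz signal
noisy = clean + rng.normal(0.0, 0.3, t.size)   # plus additive sensor noise

smoothed = moving_average(noisy, window=9)
normalized = zscore(smoothed)

# Ignore the edges, where mode="same" convolution effectively zero-pads the signal.
core = slice(10, -10)
error_before = rms((noisy - clean)[core])
error_after = rms((smoothed - clean)[core])
print(f"RMS error before: {error_before:.3f}, after: {error_after:.3f}")
print(f"normalized mean: {normalized.mean():.3f}, std: {normalized.std():.3f}")
```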

Kalman Filtering: Kalman Filtering is a recursive algorithm used to estimate the state of a dynamic system by integrating noisy sensor measurements with a predictive model.
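The recursion is easiest to see in one dimension. This minimal sketch estimates a quantity modeled as a random walk (here, a hypothetical temperature) from noisy readings: each step predicts, then blends the prediction with the new measurement using the Kalman gain. The process and measurement variances `q` and `r` are assumed values.

```python
import numpy as np

def kalman_1d(measurements, q: float, r: float, x0: float = 0.0, p0: float = 1.0):
    """Scalar Kalman filter for a random-walk state observed directly with noise.

    q: process-noise variance per step, r: measurement-noise variance.
    Returns the filtered estimates and their variances.
    """
    x, p = x0, p0
    estimates, variances = [], []
    for z in measurements:
        # Predict: a random walk keeps the same mean but becomes more uncertain.
        p = p + q
        # Update: the gain k weighs the measurement against the prediction.
        k = p / (p + r)
        x = x + k * (z - x)
        p = (1.0 - k) * p
        estimates.append(x)
        variances.append(p)
    return np.array(estimates), np.array(variances)

rng = np.random.default_rng(1)
true_value = 21.5                                    # hypothetical temperature, deg C
readings = true_value + rng.normal(0.0, 0.5, 100)    # sensor noise, sigma = 0.5 deg C
est, var = kalman_1d(readings, q=1e-4, r=0.25, x0=readings[0])

print(f"last reading: {readings[-1]:.2f}, filtered estimate: {est[-1]:.2f} "
      f"(std {np.sqrt(var[-1]):.3f})")
```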

Bayesian Inference: Bayesian inference is a statistical framework for updating beliefs about the state of a system based on prior knowledge and observed evidence, often used in probabilistic reasoning.
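As an illustration, Bayes' rule lets a system combine reports from different sensors into a single belief. This minimal sketch updates the probability that an obstacle is present after a RADAR detection and then a camera non-detection. The prior and each sensor's detection and false-alarm rates are hypothetical, and the sensors are assumed to be conditionally independent given the true state.

```python
def bayes_update(prior: float, detected: bool, p_detect: float, p_false_alarm: float) -> float:
    """Posterior P(obstacle) after one binary sensor report.

    p_detect = P(report detection | obstacle), p_false_alarm = P(report detection | no obstacle).
    """
    if detected:
        like_obs, like_free = p_detect, p_false_alarm
    else:
        like_obs, like_free = 1.0 - p_detect, 1.0 - p_false_alarm
    evidence = like_obs * prior + like_free * (1.0 - prior)
    return like_obs * prior / evidence

belief = 0.10                                                             # hypothetical prior
belief = bayes_update(belief, True, p_detect=0.90, p_false_alarm=0.05)    # RADAR detects
print(f"after RADAR:  {belief:.3f}")
belief = bayes_update(belief, False, p_detect=0.70, p_false_alarm=0.10)   # camera sees nothing
print(f"after camera: {belief:.3f}")
```

The RADAR detection raises the belief from 0.10 to about 0.67, and the camera's non-detection lowers it to 0.40: neither sensor alone settles the question, but each shifts the belief in proportion to how reliable it is.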

Wavelet Analysis: Wavelet analysis is a mathematical tool for decomposing signals into components at different scales (frequency bands) while preserving information about when those components occur in time. This makes it helpful for feature extraction and denoising in sensor data.
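To show the decomposition idea without depending on a wavelet library, here is a single-level Haar wavelet transform written with NumPy: it splits a signal into a coarse approximation and fine details, shrinks small detail coefficients (which here are mostly noise), and reconstructs. Real applications typically use several decomposition levels and smoother wavelets, for example via PyWavelets; the step signal, noise level, and threshold are assumed values.

```python
import numpy as np

def haar_forward(x: np.ndarray):
    """One-level Haar transform of an even-length signal -> (approximation, detail)."""
    even, odd = x[0::2], x[1::2]
    return (even + odd) / np.sqrt(2), (even - odd) / np.sqrt(2)

def haar_inverse(approx: np.ndarray, detail: np.ndarray) -> np.ndarray:
    """Exactly invert haar_forward."""
    x = np.empty(approx.size * 2)
    x[0::2] = (approx + detail) / np.sqrt(2)
    x[1::2] = (approx - detail) / np.sqrt(2)
    return x

def soft_threshold(c: np.ndarray, thr: float) -> np.ndarray:
    """Shrink coefficients toward zero; those smaller than thr in magnitude become zero."""
    return np.sign(c) * np.maximum(np.abs(c) - thr, 0.0)

def rms(error: np.ndarray) -> float:
    return float(np.sqrt(np.mean(error ** 2)))

rng = np.random.default_rng(7)
t = np.linspace(0.0, 1.0, 256)
clean = np.where(t < 0.5, 1.0, -1.0)               # hypothetical switching signal
noisy = clean + rng.normal(0.0, 0.2, t.size)

a, d = haar_forward(noisy)
denoised = haar_inverse(a, soft_threshold(d, thr=0.3))

err_noisy = rms(noisy - clean)
err_denoised = rms(denoised - clean)
print(f"RMS error noisy: {err_noisy:.3f}, denoised: {err_denoised:.3f}")
```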

Fourier Transforms: Fourier Transforms are mathematical techniques that decompose signals into frequency components, allowing analysis of periodic and non-periodic phenomena.
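A common use in sensor work is finding the dominant frequency in a vibration signal. This minimal sketch uses NumPy's real FFT (`numpy.fft.rfft` and `numpy.fft.rfftfreq`); the sampling rate, the 50 Hz and 120 Hz tones, and the noise level are made-up values.

```python
import numpy as np

fs = 1000.0                                    # sampling rate in Hz (assumed)
n = 1000                                       # 1 s of data -> 1 Hz frequency resolution
t = np.arange(n) / fs
rng = np.random.default_rng(3)
signal = (1.0 * np.sin(2 * np.pi * 50 * t)     # dominant 50 Hz component
          + 0.4 * np.sin(2 * np.pi * 120 * t)  # weaker 120 Hz component
          + 0.2 * rng.normal(size=n))          # broadband noise

amplitude = 2 * np.abs(np.fft.rfft(signal)) / n   # single-sided amplitude spectrum
freqs = np.fft.rfftfreq(n, d=1 / fs)

peak_bin = int(np.argmax(amplitude[1:])) + 1      # skip the DC bin
print(f"dominant frequency: {freqs[peak_bin]:.1f} Hz, amplitude ~{amplitude[peak_bin]:.2f}")
```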

Hidden Markov Models: HMMs are probabilistic models that represent a sequence of observations as being generated by a sequence of hidden (unobserved) states. They are particularly useful for time-series data.
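The forward algorithm is the basic HMM computation: it gives the probability of an observation sequence by summing over all hidden-state paths in time linear in the sequence length, and it also yields the current state estimate. The two-state model below (a road surface that is "dry" or "wet", observed through a hypothetical "low"/"high" wheel-slip reading) and all of its probabilities are invented for illustration.

```python
import numpy as np

states = ["dry", "wet"]
start = np.array([0.8, 0.2])            # P(initial state)
trans = np.array([[0.9, 0.1],           # P(next state | current state), rows = current
                  [0.3, 0.7]])
emit = np.array([[0.85, 0.15],          # P(observation | state), columns = low, high slip
                 [0.30, 0.70]])

def forward(obs: list[int]) -> tuple[float, np.ndarray]:
    """Forward algorithm: return P(obs) and P(state | obs) after the last observation."""
    alpha = start * emit[:, obs[0]]
    for o in obs[1:]:
        alpha = (alpha @ trans) * emit[:, o]   # propagate, then weight by the evidence
    total = float(alpha.sum())
    return total, alpha / total

observations = [0, 1, 1]                # low, high, high slip
likelihood, belief = forward(observations)
print(f"P(observations) = {likelihood:.4f}")
print({s: round(float(b), 3) for s, b in zip(states, belief)})
```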

Neural Networks: Neural Networks are machine-learning models inspired by the structure of the human brain. They can detect and learn complex relationships and perform tasks like classification and regression. The types of neural networks most often used in sensor fusion applications are Convolutional Neural Networks, Recurrent Neural Networks, Graph Neural Networks, Deep Belief Networks, and Autoencoders.
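One common pattern is feature-level fusion inside a network: features extracted from each sensor are concatenated and passed through shared layers. The NumPy sketch below shows only the forward pass of such a network with random, untrained weights; the feature sizes, layer widths, and three output classes are hypothetical, and in practice a deep learning framework would be used to train it.

```python
import numpy as np

rng = np.random.default_rng(0)

def dense(n_in: int, n_out: int):
    """Create a fully connected layer with small random (untrained) weights."""
    return rng.normal(0.0, 0.1, (n_in, n_out)), np.zeros(n_out)

def relu(x: np.ndarray) -> np.ndarray:
    return np.maximum(x, 0.0)

def softmax(x: np.ndarray) -> np.ndarray:
    e = np.exp(x - x.max(axis=-1, keepdims=True))   # subtract max for numerical stability
    return e / e.sum(axis=-1, keepdims=True)

# Hypothetical per-sensor feature vectors (e.g., from a camera CNN and a RADAR encoder).
camera_features = rng.normal(size=(1, 64))
radar_features = rng.normal(size=(1, 16))

W1, b1 = dense(64 + 16, 32)   # shared layer sees both sensors at once
W2, b2 = dense(32, 3)         # 3 hypothetical classes: car, pedestrian, cyclist

fused = np.concatenate([camera_features, radar_features], axis=1)   # feature-level fusion
hidden = relu(fused @ W1 + b1)
class_probs = softmax(hidden @ W2 + b2)
print(class_probs.round(3))
```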

Consensus Filtering: Consensus filtering iteratively refines estimates by reaching a consensus among multiple sensors. Each sensor or agent provides its estimate, which is then compared and fused with the estimates from the others. Outlying values are down-weighted, while more consistent estimates receive higher weight. Repeating this process makes the fused result more robust and accurate.
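The compare-and-reweight idea can be sketched as an iteratively reweighted consensus: start from the plain average, then repeatedly weight each sensor by how close it is to the current consensus, so outliers lose influence. This is one possible scheme (a Cauchy-style robust weighting), not the only form of consensus filtering; distributed variants instead have each agent exchange estimates only with its neighbors. The readings and the `scale` parameter are hypothetical.

```python
import numpy as np

def robust_consensus(estimates, scale: float = 1.0, iterations: int = 20) -> float:
    """Fuse estimates by iteratively down-weighting those far from the current consensus."""
    estimates = np.asarray(estimates, dtype=float)
    consensus = estimates.mean()                  # start from the naive average
    for _ in range(iterations):
        residuals = (estimates - consensus) / scale
        weights = 1.0 / (1.0 + residuals ** 2)    # consistent estimates weigh more
        consensus = np.sum(weights * estimates) / np.sum(weights)
    return float(consensus)

# Five hypothetical temperature sensors; one has failed and reports a wild value.
readings = [20.1, 19.9, 20.3, 20.0, 35.0]
print(f"naive mean:       {np.mean(readings):.2f}")
print(f"robust consensus: {robust_consensus(readings):.2f}")
```

The naive mean is pulled to about 23 by the failed sensor, while the reweighted consensus settles close to the 20 °C agreed on by the other four.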

Sensor fusion applications

Applications for sensor fusion range from medical imaging to robotics and from self-driving cars to industrial automation and control systems.

Data Acquisition Systems

Self-driving Vehicles

Drones

Indoor Navigation

Industrial Automation and Process Control

Medical Imaging

Neural Networks

Robotics

Healthcare

Augmented Reality and Virtual Reality

Defense and Security

Sensor fusion challenges

Using sensor fusion effectively requires more than combining sensors, algorithms, and signal processing. Deep domain-specific knowledge is key to achieving optimum results. Other challenges include:

Data heterogeneity

Sensors differ widely, and their data can vary significantly in format, accuracy, precision, and sample rate. Integrating data from different types of sensors (e.g., cameras, LiDAR, RADAR) with diverse characteristics can be challenging, so ensuring consistency and compatibility among these heterogeneous sources is essential before their data can be fused.

Noise and uncertainty

Sensors are prone to noise, inaccuracies, and uncertainties due to environmental factors, hardware limitations, or inherent sensor characteristics. Handling and mitigating these uncertainties is critical to producing reliable and accurate fused outputs. Sensor fusion accuracy depends on techniques such as Kalman filtering, Bayesian inference, and probabilistic modeling to address noise and uncertainty.
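The benefit of fusing noisy sensors can be quantified. For two independent, unbiased measurements of the same quantity with variances σ1² and σ2², the minimum-variance combination weights each by the inverse of its variance, and the fused variance 1 / (1/σ1² + 1/σ2²) is smaller than either input variance. A minimal sketch with hypothetical RADAR and LiDAR range readings:

```python
def fuse(x1: float, var1: float, x2: float, var2: float) -> tuple[float, float]:
    """Inverse-variance weighted fusion of two independent, unbiased measurements."""
    w1, w2 = 1.0 / var1, 1.0 / var2
    fused = (w1 * x1 + w2 * x2) / (w1 + w2)
    fused_var = 1.0 / (w1 + w2)          # never larger than min(var1, var2)
    return fused, fused_var

# Hypothetical readings of the same distance: RADAR (noisier) and LiDAR (more precise).
radar_m, radar_var = 30.6, 0.5 ** 2
lidar_m, lidar_var = 30.2, 0.1 ** 2
dist, var = fuse(radar_m, radar_var, lidar_m, lidar_var)
print(f"fused: {dist:.3f} m, std {var ** 0.5:.3f} m")
```

The result lies close to the more precise LiDAR reading, and its uncertainty is slightly lower than the LiDAR's alone. The same weighting is what a Kalman filter update computes when one of the two measurements plays the role of the prior.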

Computational complexity

Sensor fusion often involves complex mathematical algorithms and computational processes, especially in real-time applications that require fast data processing. As the number of sensors increases or the complexity of the fusion algorithm grows, the computational demands escalate. Balancing the need for accurate fusion results with computational efficiency is a significant challenge, particularly in resource-constrained environments such as embedded systems or mobile platforms.

Key sensor fusion trends

As more systems gain autonomous capabilities, advances in sensor fusion will continue.

Cross-domain fusion

Cross-domain fusion is one of the most exciting possibilities. Sensor data from different domains, such as IoT devices, social media, and public databases, can be integrated to provide a more holistic understanding of complex systems and phenomena.

Leveraging quantum computing

Advances in quantum computing may eventually help engineers enhance the speed and capabilities of sensor fusion. Speed is essential in real-time applications such as autonomous vehicles on land, at sea, and in the air, which are critical to human safety, and real-time sensor fusion is a growing requirement.

Leveraging artificial intelligence

Integrating advanced AI and machine learning algorithms will enable more intelligent and adaptive sensor fusion systems to learn from data and improve continuously.

Protection of privacy rights

All of this powerful technology can interfere with personal privacy rights. The industry must ensure that sensor fusion continues to advance while not compromising privacy rights.

Conclusion

By integrating data from multiple sensors and applying advanced signal processing algorithms, sensor fusion empowers advanced machines to perceive and interpret their surroundings with human-like complexity and accuracy.

Challenges persist, from managing the heterogeneity of sensor data to mitigating noise and navigating computational complexities. As sensor fusion continues to evolve, fueled by advancements in sensor technologies, signal processing techniques, data fusion architectures, and artificial intelligence, it promises to unlock unprecedented capabilities in autonomy, intelligence, and cross-domain integration.

Sensor fusion is poised to revolutionize how we interact with and understand the world around us, ushering in a new era of innovation and possibility across diverse industries.