Types of ADAS Sensors in Use Today

February 14, 2023

In this article, you will learn about different types of ADAS sensors. We will cover the topic in enough depth that you will:

Understand key types of ADAS sensors in use today

Learn about the sensors and technologies behind ADAS

See how ADAS sensors are used

This is PART 2 of 4 in the ADAS series:

Part 1: What is ADAS?

Part 2: Types of ADAS sensors in use today (this article)

Part 3: How are ADAS systems and autonomous vehicles tested?

Part 4: ADAS standards and safety protocols

Key types of ADAS sensors in use today

To jump straight to the point, here is the list of the main types of ADAS sensors in use today:

Video cameras

SONAR (aka Ultrasound)

RADAR

LiDAR

GPS/GNSS sensors (satellite interface)

We will take a closer look at each of these in the following sections.

A vehicle needs sensors to replace or augment the senses of the human driver. Our eyes are the main sensor we use when driving, but of course, the stereoscopic images that they provide need to be processed in our brains to deduce relative distance and vectors in a three-dimensional space.

We also use our ears to detect sirens, honking sounds from other vehicles, railroad crossing warning bells, and more. All of this incoming sensory data is processed by our brains and integrated with our knowledge of the rules of driving so that we can operate the vehicle correctly and react to the unexpected.

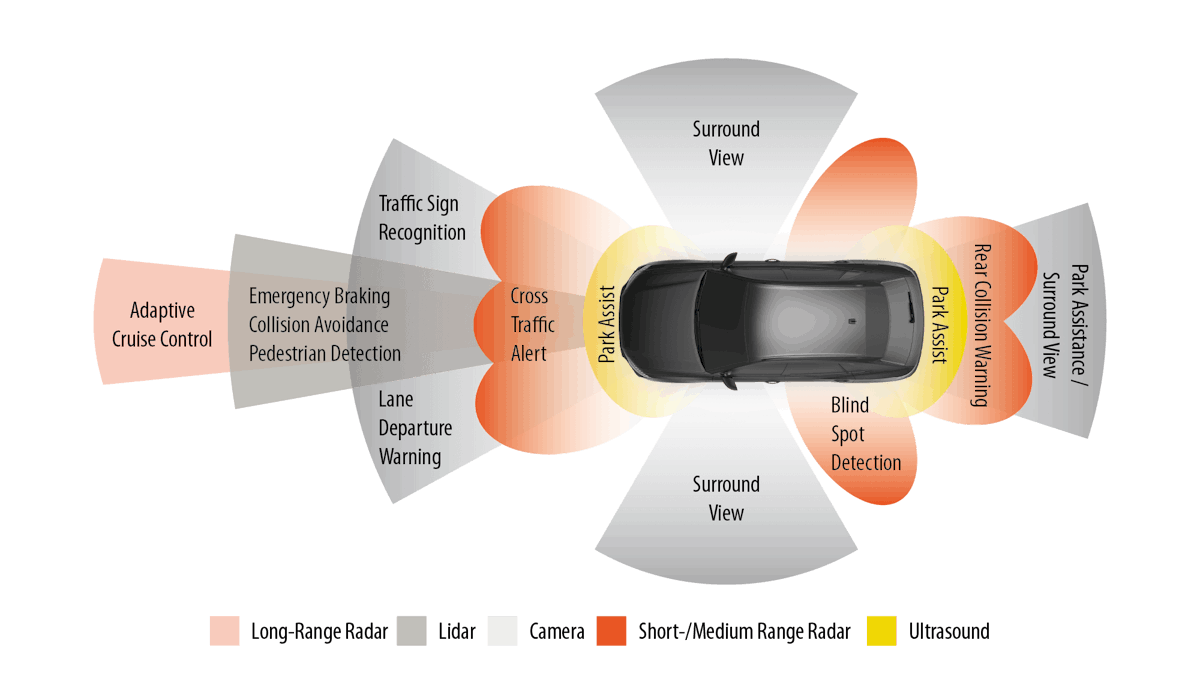

ADAS systems need to do the same. Cars are increasingly being outfitted with RADAR, SONAR, and LiDAR sensors, as well as getting absolute position data from GPS sensors and inertial data from IMU sensors. The processing computers that take in all this information and create outputs to assist the driver, or take direct action, are steadily increasing in power and speed in order to handle the complex tasks involved in driving.

The sheer amount of sensor data that today’s cars and commercial vehicles are handling is staggering, and it’s increasing all the time. Even the impressive numbers in the graphic below will be dwarfed by the fully autonomous vehicles of the future.

Video cameras (aka optical image sensors)

The first use of cameras in automobiles was the backup camera, aka “reverse camera.” Combined with a flat video screen on the dashboard, this camera allows drivers to more safely back up into a parking space, or when negotiating any maneuver that involves driving in reverse. But the primary initial motivation was to improve pedestrian safety. According to the Department of Transportation, more than 200 people are killed and at least 12,000 more are injured each year because a car backed into them. These victims are mostly children and older people with limited mobility.

Once only installed in high-end cars, backup cameras have been required in all vehicles sold in the USA since May 2018. Canada adopted a similar requirement. The European Commission is moving toward requiring a reversing camera or monitoring system in all cars, vans, trucks, and buses in Europe by 2022. The Transport Ministry in Japan is requiring backup sensors (a camera, ultrasonic sensors, or both) on all automobiles sold in Japan by May 2022.

But in today’s ADAS vehicles there can be multiple cameras, pointing in different directions. And they’re not just for backup safety anymore: their outputs are used to build a three-dimensional model of the vehicle’s surroundings within the ADAS computer system. Cameras are used for traffic sign recognition, reading lines and other markings on the road, detecting pedestrians, obstructions, and much more. They can also be used for security purposes, rain detection, and other convenience features as well.

In this screenshot from a Mobileye camera system, you can see how the system identifies and labels vehicles and pedestrians, and determines that the traffic light is green.

The output of most of these cameras is not visible to the driver. Rather, it feeds into the ADAS computer system. These systems are programmed to process the stream of images and identify stop signs, for example, and to understand that another vehicle is signaling a right turn, that a traffic light has just turned yellow, and more. Cameras are heavily used to detect road markings, which is critical for lane-keeping assistance. All of this involves a huge amount of data and requires considerable processing power, and the demand is only increasing in the march toward self-driving cars.

Several sensor types are in use today, principally CMOS and CCD. CCD sensors offer superior dynamic range and resolution. However, CMOS sensors do not require as much power and can be less expensive due to their silicon architecture. Both technologies are built around a rectangular array of pixels that output a current in proportion to the intensity of the light focused on that pixel.

Many vehicle cameras have been adapted to see better in the dark than human beings can, substituting a white sensor for the typical green one in an RGB optical sensor. Major makers of vehicle camera sensors include Mobileye (an Israeli company bought for $15.3 billion by Intel in 2017), and OmniVision. Mobileye has stated that if a human can drive a car using only vision, then so can a computer. And unlike a human driver, cameras can be looking in all directions at the same time.

Watch this video produced by Mobileye, demonstrating a fully autonomous, unedited 40-minute drive through Jerusalem, using their 12-camera system. A driver was at the wheel for safety, but he never touched the controls until the demonstration ended.

Tesla has decided to use RADAR-informed passive optical sensors (video cameras) instead of LiDAR. Their position is that because it operates by projecting laser light at wavelengths close to the visual spectrum, LiDAR is susceptible to interference by occlusion from rain, dust, snow, etc. Of course, so are cameras, but Tesla uses RADAR, which can see through these occlusions, to imprint the range of detected objects onto the 3D world created by the cameras.

Vision is absolutely required for human beings to drive a car, but there are other ways to “see.” For example, bats, whales, dolphins, and other animals use a form of echolocation to navigate. Submarines emit SONAR pulses (sound pressure waves) and then measure their return after bouncing off the ocean floor and other objects. Aircraft similarly use RADAR to bounce radio frequency signals off the ground, other aircraft, etc., in order to detect them and measure their distance.

Bouncing waves off of surrounding objects and then measuring their return times is at the center of range-finding sensors. RADAR uses radio waves, LiDAR uses light, and SONAR (aka ultrasound aka ultrasonic) uses acoustic sound energy. All of these sensing methodologies are being used in various combinations by today’s ADAS-equipped vehicles.
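The round-trip timing described above can be sketched in a few lines. This is only an illustration, not any sensor vendor's implementation: the function name `echo_distance_m` is hypothetical, and the speed of sound used here (about 343 m/s) assumes air at roughly 20 °C.

```python
# Illustrative sketch: one-way distance from a round-trip echo time.
SPEED_OF_SOUND_M_S = 343.0          # assumption: air at about 20 degrees C
SPEED_OF_LIGHT_M_S = 299_792_458.0  # radio waves (RADAR) and laser light (LiDAR)


def echo_distance_m(round_trip_s: float, wave_speed_m_s: float) -> float:
    """Return the one-way distance to a reflecting object.

    The pulse travels to the object and back, so the one-way distance
    is half of (wave speed x round-trip time).
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    return wave_speed_m_s * round_trip_s / 2.0


# An ultrasonic echo that returns after 10 milliseconds
ultrasonic_m = echo_distance_m(0.010, SPEED_OF_SOUND_M_S)

# A RADAR or LiDAR echo that returns after 1 microsecond
radio_m = echo_distance_m(1e-6, SPEED_OF_LIGHT_M_S)

print(f"ultrasonic target: {ultrasonic_m:.3f} m")
print(f"radio/laser target: {radio_m:.1f} m")
```

The same function serves all three sensor families; only the propagation speed changes. That difference in speed is why acoustic sensors deal with echo times of milliseconds, while RADAR and LiDAR must resolve microseconds or less.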

Even Mobileye, which is at the forefront of using cameras, is developing LiDAR-based sensing in parallel, building up two completely independent systems for creating a real-time model of the environment that leverages the best of both technologies.

SONAR / ultrasound sensors

SONAR (Sound Navigation and Ranging) sensors, aka “ultrasound” sensors, generate high-frequency audio on the order of 48 kHz, more than twice the upper limit of typical human hearing (roughly 20 kHz). (Interestingly, many dogs can hear these sensors, but they don’t seem bothered by them.) When instructed by the car’s ECU, these sensors emit an ultrasonic burst, and then they “listen” for the returning reflections from nearby objects.

By measuring the reflections of this audio, these sensors can detect objects that are close to the vehicle. Ultrasound sensors are heavily used in backup detection and self-parking sensors in cars, trucks, and buses. They are located on the front, back, and corners of vehicles.

Since they operate by moving the air and then detecting acoustic reflections, they are ideal for low-speed applications, when the air around the vehicle is typically not moving very fast. Because they are acoustic in nature, ultrasound performance can be degraded by exposure to an extremely noisy environment.

Ultrasound sensors have a limited range compared to RADAR, which is why they are not used for long-range measurements, such as adaptive cruise control or high-speed driving. But if the object is within 2.5 to 4.5 meters (8.2 to 14.8 feet) of the sensor, ultrasound is a less expensive alternative to RADAR. Ultrasound sensors are not used for navigation because their range is limited, and they cannot detect objects smaller than 3 cm (1.18 in.).

Interestingly, electric car maker Tesla invented a way to project ultrasound through metal, allowing them to hide these sensors all over their cars, to maintain vehicle aesthetics. The round disks on the bumpers of the car in the picture above are ultrasound sensors.

RADAR sensors

RADAR (Radio Detection and Ranging) sensors are used in ADAS-equipped vehicles for detecting large objects in front of the vehicle. They often use a 76.5 GHz RADAR frequency, but other frequencies from 24 GHz to 79 GHz are also used.

Two basic methods of RADAR detection are used:

direct propagation

indirect propagation

In both cases, however, they operate by means of emitting these radio frequencies and measuring the propagation time of the returned reflections. This allows them to measure both the size and distance of an object and its relative speed.

Applications for automotive RADAR

Because RADAR signals can reach up to 300 meters in front of the vehicle, they are particularly important during highway-speed driving. Their high frequencies also mean that the detection of other vehicles and obstacles is very fast. Additionally, RADAR can “see” through bad weather and other visibility occlusions. Because their wavelengths are just a few millimeters long, they can detect objects several centimeters in size or larger.
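The link between RADAR frequency and wavelength follows from the relation wavelength = speed of light / frequency. The short sketch below applies it to the frequencies mentioned earlier in this article; the function name `wavelength_mm` is hypothetical and chosen only for this example.

```python
# Illustrative sketch: free-space wavelength of a RADAR signal.
SPEED_OF_LIGHT_M_S = 299_792_458.0


def wavelength_mm(frequency_ghz: float) -> float:
    """Return the free-space wavelength in millimeters for a frequency in GHz."""
    if frequency_ghz <= 0:
        raise ValueError("frequency must be positive")
    wavelength_m = SPEED_OF_LIGHT_M_S / (frequency_ghz * 1e9)
    return wavelength_m * 1000.0


for f_ghz in (24.0, 76.5, 79.0):
    print(f"{f_ghz:5.1f} GHz -> {wavelength_mm(f_ghz):6.3f} mm")
```

At 76.5 GHz the wavelength comes out just under 4 mm, while the lower 24 GHz band gives a wavelength of roughly 12.5 mm.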

RADAR is especially good at detecting metal objects, like cars, trucks, and buses. As the image above shows, they are essential for collision warning and mitigation, blind-spot detection, lane change assistance, parking assistance, adaptive cruise control (ACC), and more.

LiDAR sensors

LiDAR (Light Detection and Ranging) systems are used to detect objects and map their distances in real-time. Essentially, LiDAR is a type of RADAR that uses one or more lasers as the energy source. It should be noted that the lasers used are the same eye-safe types used at the check-out line in grocery stores.

High-end LiDAR sensors rotate, emitting eye-safe laser beams in all directions. LiDAR employs a “time of flight” receiver that measures the reflection time.

An IMU and GPS are typically also integrated with the LiDAR so that the system can measure the time it takes for the beams to bounce back and factor in the vehicle’s displacement during the interim, to construct a high-resolution 3D model of the car’s surroundings called a “point cloud.” Billions of points are captured in real-time to create this 3D model, scanning the environment up to 300 meters (984 ft.) around the vehicle, and within a few centimeters (~1 in.) of accuracy.
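To make the idea of a point cloud more concrete, here is a minimal sketch of how a single laser return could be turned into a 3D point. It is a simplification under stated assumptions: the function name `lidar_point` and the axis convention (x forward, y left, z up, in the sensor's own frame) are chosen for this example, and the sketch does not compensate for the vehicle's movement, which is the job the IMU and GPS perform in a real system.

```python
import math

# Illustrative sketch: convert LiDAR returns into points of a point cloud.
SPEED_OF_LIGHT_M_S = 299_792_458.0


def lidar_point(round_trip_s: float, azimuth_deg: float, elevation_deg: float):
    """Convert one laser return into an (x, y, z) point in the sensor frame.

    Assumed convention: x forward, y left, z up. Azimuth is measured in the
    horizontal plane from the x axis; elevation is measured up from that plane.
    """
    # Time of flight gives the range: the beam travels out and back.
    range_m = SPEED_OF_LIGHT_M_S * round_trip_s / 2.0
    azimuth = math.radians(azimuth_deg)
    elevation = math.radians(elevation_deg)
    horizontal_m = range_m * math.cos(elevation)
    return (
        horizontal_m * math.cos(azimuth),
        horizontal_m * math.sin(azimuth),
        range_m * math.sin(elevation),
    )


# Hypothetical returns: (round-trip time in s, azimuth in deg, elevation in deg)
returns = [
    (2.0e-7, 0.0, 0.0),     # straight ahead
    (2.0e-7, 90.0, 0.0),    # directly to the left
    (4.0e-7, 45.0, -10.0),  # ahead-left, angled down toward the road
]

point_cloud = [lidar_point(*r) for r in returns]
for x, y, z in point_cloud:
    print(f"x={x:8.3f} m  y={y:8.3f} m  z={z:8.3f} m")
```

A real sensor repeats this conversion for every laser and every firing angle, which is how the number of points grows so quickly.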

LiDAR sensors can be equipped with up to 128 lasers inside. The more lasers, the higher the resolution of the 3D point cloud that can be built.

In addition to the spinning LiDAR technology invented by Velodyne, there are solid-state LiDARs on the market today. In fact, Velodyne itself offers several solid-state LiDAR sensors. Another developer is Quanergy, which makes a CMOS-based LiDAR that uses optical phased array technology to guide each laser pulse in a chosen direction rather than spinning a mirror.

LiDAR sensors can detect objects with much greater precision than RADAR or ultrasound sensors. However, their performance can be degraded by interference from smoke, fog, rain, and other occlusions in the atmosphere. But because they operate independently of ambient light (they project their own light), they are not affected by darkness, shadows, sunlight, or oncoming headlights.

LiDAR sensors are typically more expensive than RADAR because of their relative mechanical complexity. They are increasingly used in conjunction with cameras because LiDAR cannot detect colors (such as the ones on traffic lights, red brake lights, and road signs), nor can they read the text as well as cameras. Cameras can do both of those things, but they require more processing power behind them to perform these tasks.

GPS/GNSS sensors

In order to make self-driving vehicles a reality, we require a high-precision navigation system. Vehicles today are using the Global Navigation Satellite System (GNSS). GNSS is more than just the “GPS” that everyone knows about.

GPS, which stands for Global Positioning System, is a constellation of more than 30 satellites circling the planet. Each satellite emits extremely accurate time and position data continuously. When a receiver gets usable signals from at least four of these satellites, it can calculate its position from its distance to each one (a technique known as trilateration). The more usable signals it gets, the more accurate the results.
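Finding a position from measured distances can be illustrated with a deliberately simplified example. The sketch below works in two dimensions with three fixed reference points and perfectly known distances; the function name `trilaterate_2d` and the coordinates are hypothetical. A real GNSS receiver solves the problem in three dimensions and must also estimate its own clock error, which is why it needs a fourth satellite.

```python
import math

# Illustrative sketch: 2D position from distances to three known points.


def trilaterate_2d(anchors, ranges):
    """Return (x, y) given three anchor positions and the distance to each.

    Subtracting the first circle equation from the other two removes the
    squared unknowns and leaves two linear equations in x and y.
    """
    (x1, y1), (x2, y2), (x3, y3) = anchors
    r1, r2, r3 = ranges

    a1, b1 = 2.0 * (x2 - x1), 2.0 * (y2 - y1)
    c1 = r1**2 - r2**2 + x2**2 - x1**2 + y2**2 - y1**2
    a2, b2 = 2.0 * (x3 - x1), 2.0 * (y3 - y1)
    c2 = r1**2 - r3**2 + x3**2 - x1**2 + y3**2 - y1**2

    det = a1 * b2 - a2 * b1
    if abs(det) < 1e-12:
        raise ValueError("anchors are collinear; position is ambiguous")

    # Solve the 2x2 linear system with Cramer's rule.
    x = (c1 * b2 - c2 * b1) / det
    y = (a1 * c2 - a2 * c1) / det
    return x, y


# Hypothetical setup: three reference points and a receiver at (3, 4)
anchors = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
true_position = (3.0, 4.0)
ranges = [math.dist(true_position, anchor) for anchor in anchors]

estimate = trilaterate_2d(anchors, ranges)
print(f"estimated position: ({estimate[0]:.3f}, {estimate[1]:.3f})")
```

With noisy real-world measurements the circles no longer meet at a single point, so receivers use more signals than the minimum and compute a best-fit solution.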

But GPS is not the only global positioning system. There are multiple constellations of GNSS satellites orbiting the earth right now:

GPS - USA

GLONASS - Russia

Galileo - Europe

BeiDou - China

The best GNSS systems installed in today’s vehicles have the ability to utilize two or three of these constellations. Using multiple frequencies provides the best possible performance because it reduces errors caused by signal delays, which are sometimes caused by atmospheric interference. Also, because the satellites are always moving, tall buildings, as well as hills and other obstructions, can block a given constellation at certain times. Being able to access more than one constellation mitigates these blockages.
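One well-known way that two frequencies reduce atmospheric delay error is the so-called ionosphere-free combination, which relies on the fact that the first-order ionospheric delay scales with 1/f². The article does not say which method any particular receiver uses, so treat the following as a sketch of the general principle only. The function name `ionosphere_free_range_m` and the simulated measurement values are assumptions; the frequencies are the GPS L1 and L2 carrier frequencies.

```python
# Illustrative sketch: removing first-order ionospheric delay with two frequencies.
L1_HZ = 1575.42e6  # GPS L1 carrier frequency
L2_HZ = 1227.60e6  # GPS L2 carrier frequency


def ionosphere_free_range_m(p1_m: float, p2_m: float,
                            f1_hz: float = L1_HZ, f2_hz: float = L2_HZ) -> float:
    """Combine two range measurements so the 1/f^2 delay term cancels."""
    f1_sq = f1_hz**2
    f2_sq = f2_hz**2
    return (f1_sq * p1_m - f2_sq * p2_m) / (f1_sq - f2_sq)


# Simulated (hypothetical) measurements to one satellite
true_range_m = 20_200_000.0
delay_l1_m = 5.0                                # assumed delay on L1
delay_l2_m = delay_l1_m * (L1_HZ / L2_HZ) ** 2  # the same delay scaled to L2

p1 = true_range_m + delay_l1_m
p2 = true_range_m + delay_l2_m

corrected = ionosphere_free_range_m(p1, p2)
print(f"L1-only error:  {p1 - true_range_m:.3f} m")
print(f"combined error: {corrected - true_range_m:.6f} m")
```

The combination cancels only the first-order ionospheric term. Other error sources, such as multipath and receiver noise, remain.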

Here is the frequency span of today’s most popular GNSS constellations:

Consumer-type (non-military) GNSS provides positional accuracy of about one meter (39 inches). This is fine for the typical navigation system in a human-operated vehicle. But for real autonomy, we need centimeter-level accuracy.

GNSS accuracy can be improved using a regional or localized augmentation system. There are both ground and space-based systems in use today that provide GNSS augmentation. Ground-based augmentation systems are known collectively as GBAS, while satellite or space-based augmentation systems are known collectively as SBAS.

Just a few of the SBAS systems in use today:

WAAS (Wide Area Augmentation System) - run by the FAA in the USA, this system uses a network of ground reference stations located across North America to compute correction values, which are then uplinked to WAAS satellites for broadcast. WAAS-capable GNSS receivers can use this data to improve GPS accuracy.

EGNOS (European Geostationary Navigation Overlay Service) - an SBAS developed by the European Space Agency. This correction system will be integrated into Europe’s Galileo GNSS in the future.

MSAS (Multi-functional Satellite Augmentation System) - an SBAS developed by Japan

StarFire - SBAS developed by John Deere company primarily for farm vehicles, which are heavy GPS users

OmniStar - a commercial, subscription-based SBAS that covers most of the earth

Atlas - a commercial, subscription-based SBAS that covers most of the earth

Some of the GBAS systems in use today:

DGPS (Differential GPS) is a GBAS often installed at airports to provide very accurate aircraft positioning on the approaches and runways. (A GBAS-based Landing System is called a GLS.) Many aircraft from Boeing and Airbus are equipped with GLS auto-landing systems.

NDGPS (Nationwide Differential GPS System) is a GBAS available on roads and waterways, run by the FHWA (Federal Highway Administration) in the USA.

A great example of how GNSS, IMU, and augmentation systems are being integrated into ADAS sensors today is the INS1000 GNSS sensor from ACEINNA. It combines a GNSS receiver with dual-frequency L1/L2 RTK and an IMU based on internal MEMS gyros and accelerometers. It is compatible with GPS, GLONASS, BeiDou, and Galileo GNSS constellations as well as SBAS augmentation.

With RTK correction they claim positional accuracy of 2 cm (0.79 inches).

A few examples of how GNSS and other ADAS sensors work together

When we drive into a covered parking garage or tunnel, the GNSS signals from the sky are completely blocked by the roof. IMU (inertial measurement unit) sensors can sense changes in acceleration in all axes, and perform a “dead reckoning” of the vehicle’s position until the satellites return. Dead reckoning accuracy drifts over time, but it is very useful for short durations when the GNSS system is “blind.”
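Dead reckoning, and the way its error grows, can be shown with a very small simulation. This is a one-dimensional sketch under strong assumptions: the function name `dead_reckon`, the 10 Hz sample rate, and the sensor bias value are all hypothetical, and a real IMU-based system also tracks rotation and works in three dimensions.

```python
# Illustrative sketch: 1D dead reckoning by integrating acceleration samples.


def dead_reckon(position_m, velocity_m_s, accelerations_m_s2, dt_s):
    """Propagate position and velocity through a list of acceleration samples.

    Each sample is treated as constant over one time step of dt_s seconds.
    """
    for accel in accelerations_m_s2:
        position_m += velocity_m_s * dt_s + 0.5 * accel * dt_s**2
        velocity_m_s += accel * dt_s
    return position_m, velocity_m_s


DT_S = 0.1    # assumed IMU sample interval (10 Hz)
SAMPLES = 50  # 5 seconds without GNSS, e.g. inside a tunnel

# The vehicle enters the tunnel at 20 m/s and holds a constant speed.
ideal_pos, ideal_vel = dead_reckon(0.0, 20.0, [0.0] * SAMPLES, DT_S)

# The same drive, but the accelerometer has a small uncorrected bias.
BIAS_M_S2 = 0.05
biased_pos, biased_vel = dead_reckon(0.0, 20.0, [BIAS_M_S2] * SAMPLES, DT_S)

drift_m = biased_pos - ideal_pos
print(f"distance travelled: {ideal_pos:.1f} m")
print(f"position drift after 5 s: {drift_m:.3f} m")
```

Because the bias is integrated twice, the position error grows with the square of the elapsed time. Doubling the time without GNSS roughly quadruples the drift, which is why dead reckoning is only relied on for short periods.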

Under any driving conditions, cameras, LiDAR, SONAR, and RADAR sensors can provide the centimeter-level positional accuracy that GNSS simply cannot (without RTK correction). They can also sense other vehicles, pedestrians, and other road users - something that GNSS is not meant to do because the satellites are not sensors - they simply report their time and position very accurately.

In cities, the buildings create a so-called “urban canyon” where GNSS signals bounce around, resulting in multipath interference (the same signal reaches the GNSS antenna at different times, confusing the processor). The IMU can dead-reckon under these conditions to provide vital position data, while the other sensors (cameras, LiDAR, RADAR, and SONAR) continue to sense the world around the vehicle on all sides.

Thoughts about ADAS sensors

A human-operated car works because we have stereoscopic vision, and are able to deduce relative distance and velocity in our brains. Even with one eye closed, we can deduce distance and size using monocular vision fairly accurately because our brains are trained by real-world experience.

Our eyes and brains also allow us to read and react to signs and follow maps, or simply remember which way to go because we know the area. We know how to check mirrors quickly so that we can see in more than one direction without turning our heads around.

Our brains know the rules of driving. Our ears can hear sirens, honking, and other sounds, and our brains know how to react to these sounds in context. Just driving a short distance to buy some milk and bread, a human driver makes thousands of decisions, and makes hundreds of mechanical adjustments, large and small, using the vehicle’s hand and foot controls.

Replacing the optical and aural sensors connected to a brain made up of approximately 86 billion neurons is no easy feat. It requires a suite of sensors and very advanced processing that is fast, accurate, and precise. Truly self-driving cars are being developed to learn from their experience just as human beings do, and to integrate that knowledge into their behavior.

Each of the sensors used in ADAS vehicles has strengths and weaknesses:

LiDAR is great for seeing in 3D and does well in the dark, but it can’t see color. LiDAR can detect very small objects, but its performance is degraded by smoke, dust, rain, etc. in the atmosphere. LiDAR sensors require less external processing than cameras, but they are also more expensive than cameras.

Cameras can see whether the traffic light is red, green, amber, or other colors. They’re great at “reading” signs and seeing lines and other markings. But they are less effective at seeing in the dark, or when the atmosphere is dense with fog, rain, snow, etc. They also require more processing than LiDAR.

RADAR can see farther up the road than other range-finding sensors, which is essential for high-speed driving. RADAR sensors work well in the dark and when the atmosphere is occluded by rain, dust, fog, etc. They can’t build models as precisely as cameras or LiDARs, nor can they detect very small objects as other sensors can.

SONAR sensors are excellent at close proximity range-finding, such as parking maneuvers, but not good for distance measurements. They can be disturbed by wind noise, so they don’t work well at high vehicle speeds.

GNSS, combined with a frequently updated map database, is essential for navigation. But raw GNSS accuracy of a meter or more is not sufficient for fully autonomous driving, and without a line of sight to the sky, GNSS receivers cannot navigate at all. For autonomous driving, they must be integrated with other sensors, including an IMU, and be augmented with an RTK, SBAS, or GBAS system.

IMU systems provide the dead-reckoning that GNSS systems need when the line of sight to the sky is blocked or disturbed by signal multipath in the “urban canyon.”

These sensors complement each other and allow the central processor to create a three-dimensional model of the environment around the vehicle, to know where to go and how to get there, to follow the rules of driving, and react to the expected and unexpected that happens on every roadway and parking lot.

In short, we need them all, or a combination of them, to achieve ADAS and eventually, autonomous driving.

Summary

When you were a child, did you ever think your family car would be outfitted with RADAR and SONAR as airplanes and submarines had? Did you even know what LiDAR was? Did you imagine flat-screen displays dominating the dashboard and a navigation system connected to satellites in space? It would have seemed like science fiction, and utterly out of reach for 100 years at least. But today, all of that and more are a reality.

ADAS is arguably the single most important type of automotive development going on today. Of course, there are hybrid and electric power developments going on in parallel, which are also extremely important for reducing greenhouse gases and the use of fossil fuels. It’s hard to name an automobile maker that isn’t already selling electric versions of its cars or isn’t in the late stages of developing them.

But ADAS goes directly to the most important aspect of travel: human safety. Since more than 90% of road accidents, injuries and fatalities are due to human error, every advancement in ADAS has a clear and absolute effect on preventing injuries and deaths.
